Inspirational Photo

I chose this image because I liked the idea of a statue covered in mist or fog of some sort. The features I decided to implement as a result of this photo were homogeneous volume rendering, subsurface scattering, and environment mapping. (Image from http://www.stephenpenland.com/photo/guardian/)

Volume Rendering

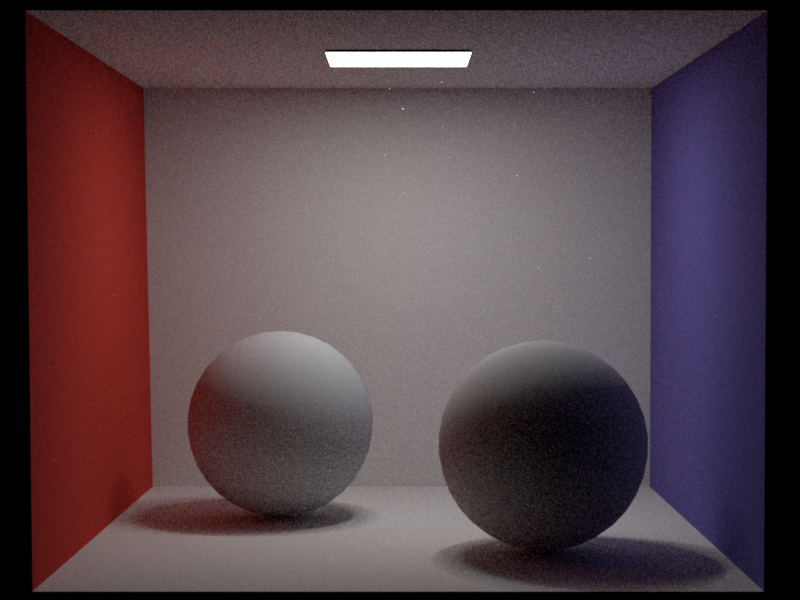

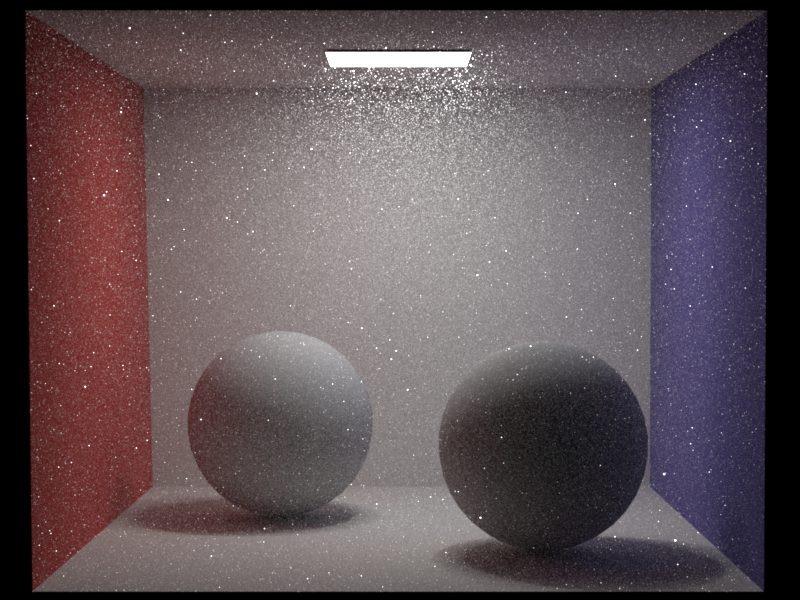

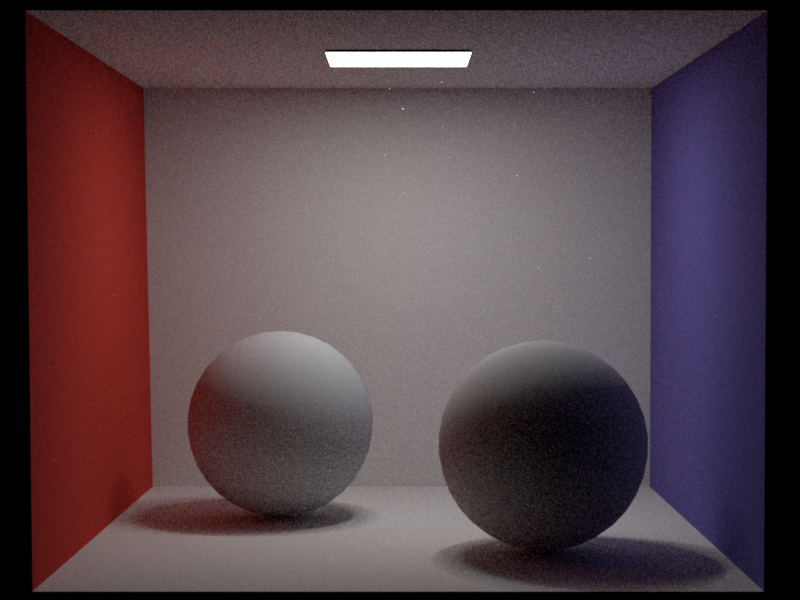

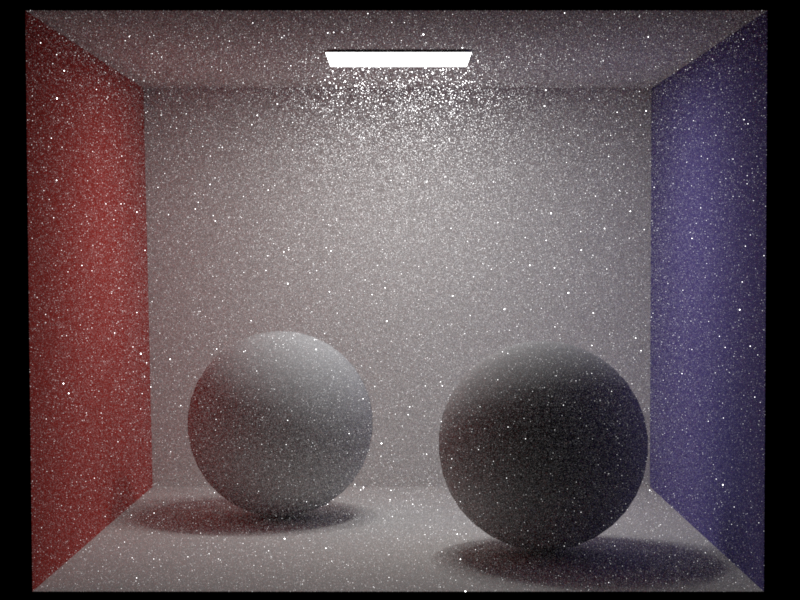

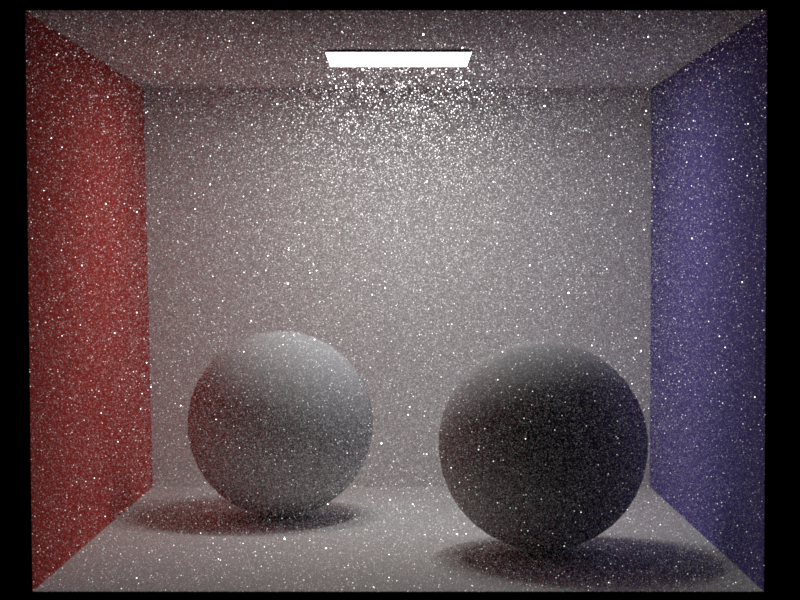

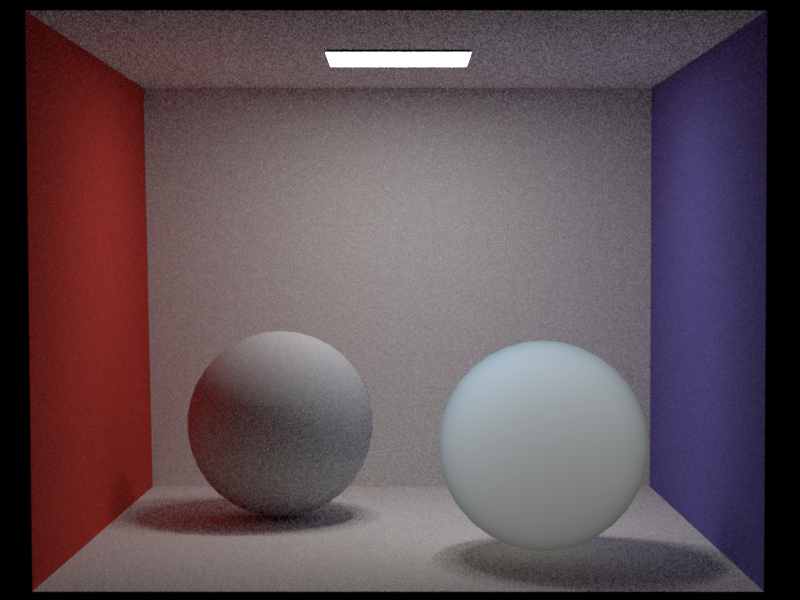

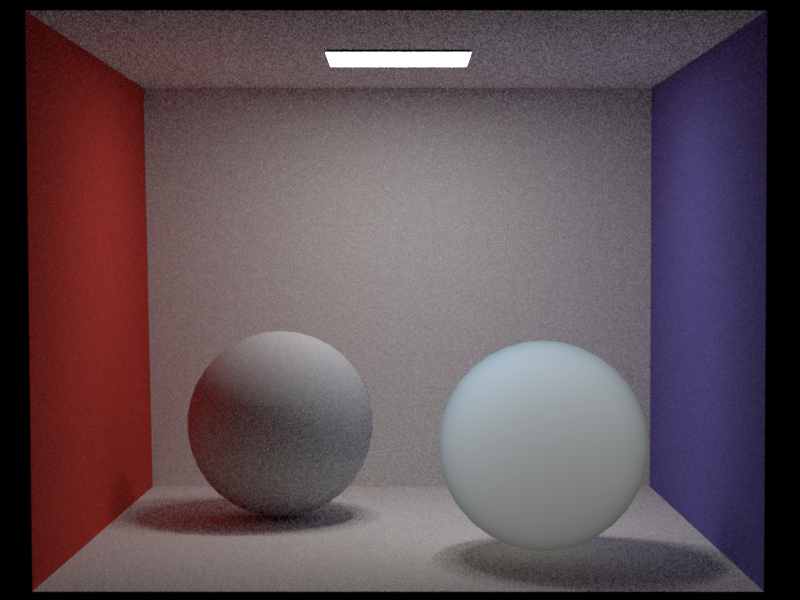

For volume rendering I implemented an integrator that renders homogeneous, isotropic media defined by an extinction coefficient (\(\sigma_t\)) and a scattering coefficient (\(\sigma_s\)). The user specifies both coefficients in the XML scene description. The path tracing is very similar to the path_mis integrator from earlier in the term, except that there are now both surface and volume interactions. Each is handled separately, and the integrator decides which interaction occurs by checking whether the free-flight distance it samples is shorter than the distance to the nearest surface. I modeled my code largely after the PBRT method and the notes from class, and I did not run into any particularly large challenges implementing this algorithm. To test my implementation, I used the Cornell Box from assignment four, but changed both sphere materials to diffuse. Below is a comparison of three images with varying \(\sigma_t\) and \(\sigma_s\):

Cbox: Homogeneous Volume Rendering (varied \(\sigma_t\) and low \(\sigma_s\))

Cbox: Homogeneous Volume Rendering (medium \(\sigma_t\) and varying \(\sigma_s\))
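The surface-versus-medium decision described in this section can be sketched roughly as follows. This is a minimal illustration and not the code in v_path_mats.cpp: the names `Interaction`, `FreeFlightSample`, `sampleFreeFlight`, and `eventWeight` are hypothetical, and the sketch assumes a single-channel, homogeneous medium whose free-flight distance is sampled in proportion to the transmittance \(e^{-\sigma_t t}\).

```cpp
#include <cassert>
#include <cmath>

// Hypothetical types for illustration only.
enum class Interaction { Surface, Medium };

struct FreeFlightSample {
    Interaction type;  // which event happens first along the ray
    double t;          // distance to that event
};

// Sample a free-flight distance by inverting the CDF 1 - exp(-sigmaT * t),
// then compare it with the distance to the nearest surface.
// xi is a uniform random number in [0, 1).
FreeFlightSample sampleFreeFlight(double sigmaT, double tSurface, double xi) {
    assert(sigmaT > 0.0 && tSurface >= 0.0 && xi >= 0.0 && xi < 1.0);
    double tMedium = -std::log(1.0 - xi) / sigmaT;
    if (tMedium < tSurface)
        return {Interaction::Medium, tMedium};   // scatter inside the volume
    return {Interaction::Surface, tSurface};     // reach the surface first
}

// Throughput weight for the sampled event under this sampling strategy.
// Medium event: (sigmaS * T) / (sigmaT * T) = sigmaS / sigmaT (the albedo).
// Surface event: T / P(reach surface) = T / T = 1.
double eventWeight(Interaction type, double sigmaS, double sigmaT) {
    assert(sigmaT > 0.0 && sigmaS >= 0.0);
    return type == Interaction::Medium ? sigmaS / sigmaT : 1.0;
}
```

With this strategy the transmittance cancels against the sampling density, so a larger \(\sigma_t\) shows up only as more frequent medium events, and \(\sigma_s / \sigma_t\) controls how much energy survives each one.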

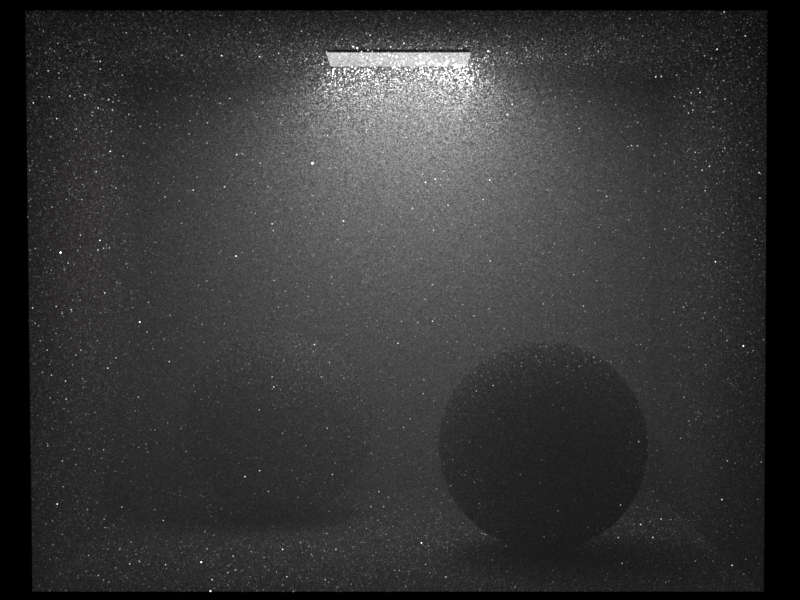

Validation

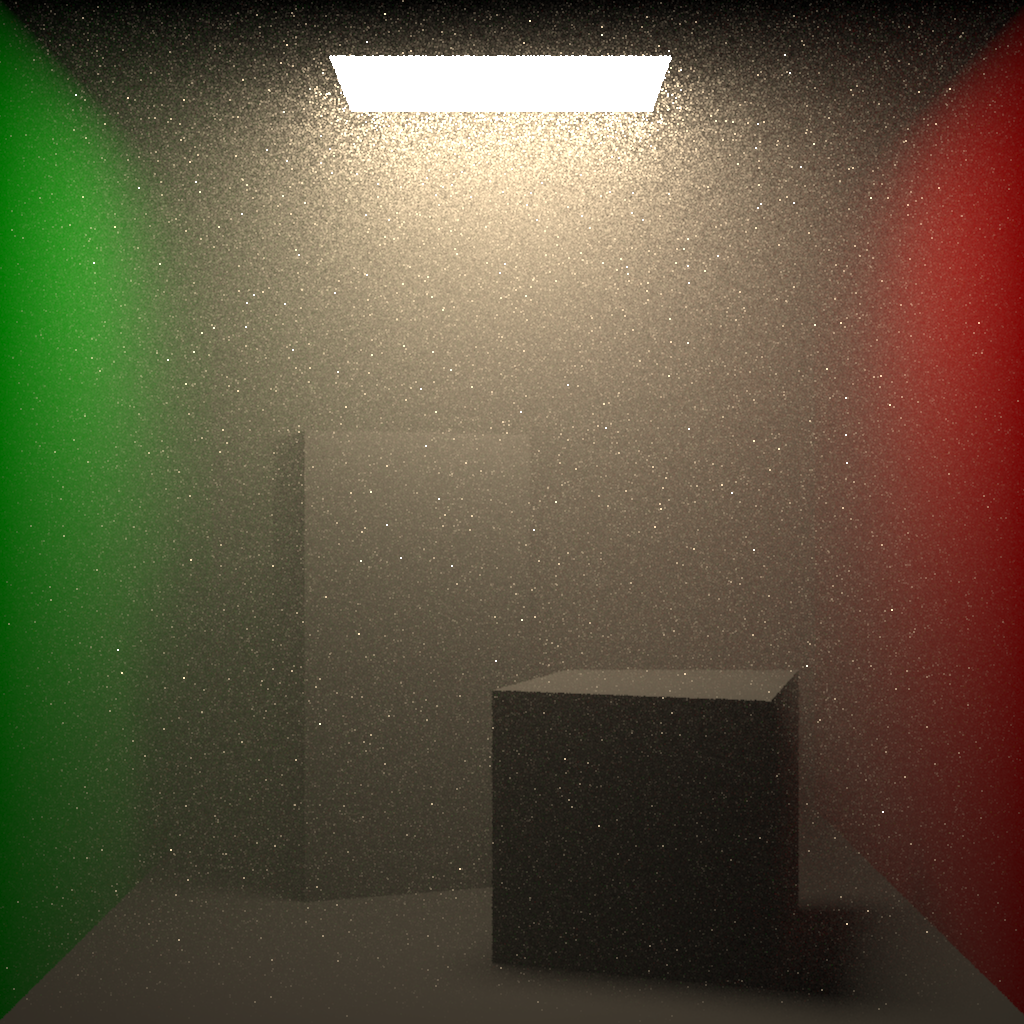

After examining the range of media produced, I concluded that the mechanics of the volume integrator were working well. I found an image of another Cornell Box in a paper co-authored by Professor Jarosz that compared volumetric path tracing to more advanced techniques. The image below seems to show the same characteristic variation as the ones produced by my integrator.

Image Rendered in a Paper Using Similar Technique

(Image from Jarosz, W., Donner, C., Zwicker, M., and Jensen, H. W. 2008. Radiance caching for participating media. ACM Trans. Graph. 27, 1, Article 7 (March 2008), 11 pages.)

Relevant Pieces of Code

- v_path_mats.cpp (a misnomer, since it uses a naive MIS implementation)

Subsurface Scattering

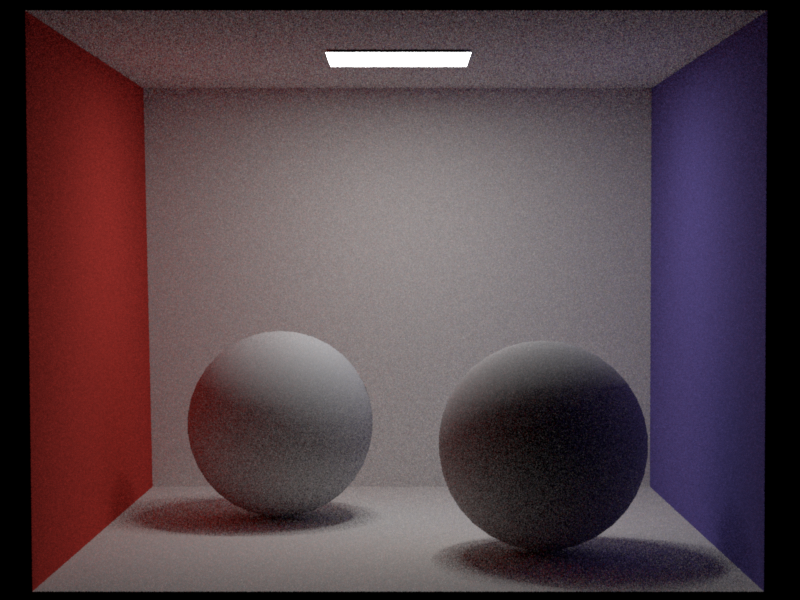

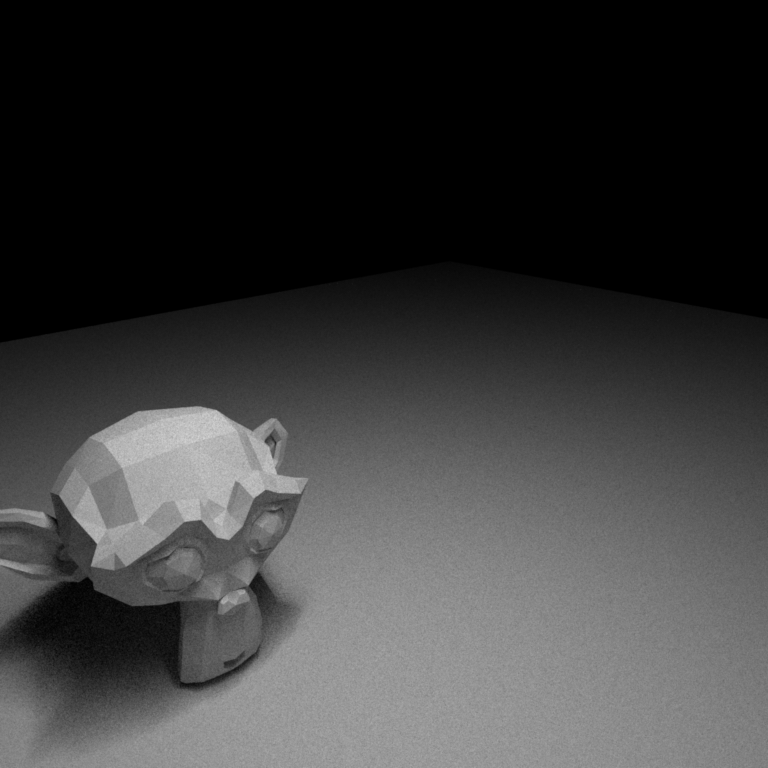

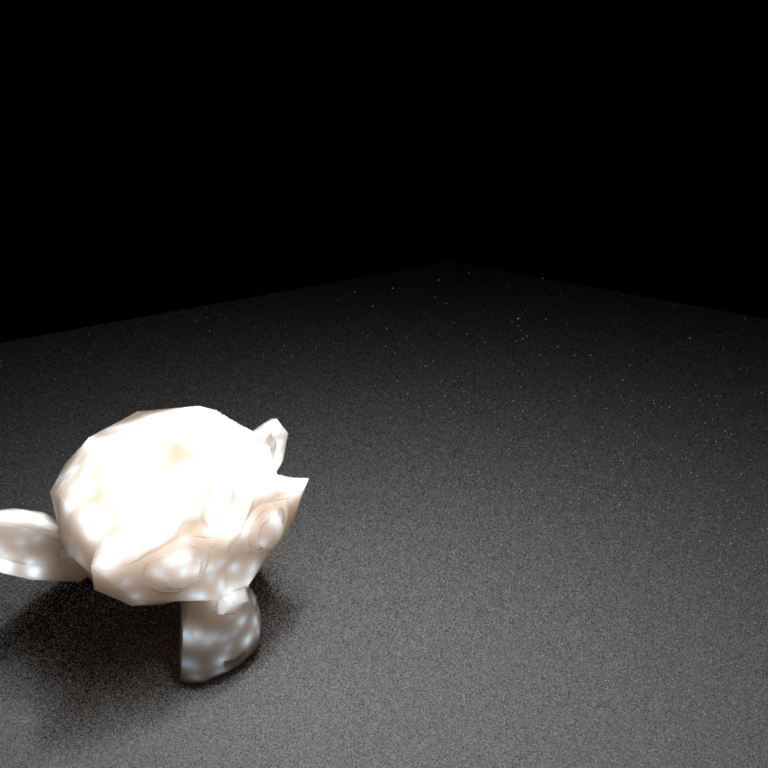

For subsurface scattering I read about the method outlined in "Physically Based Rendering" and then closely followed and implemented the method described in "A Practical Model for Subsurface Light Transport" by Jensen, Marschner, Levoy, and Hanrahan. This is a two-pass method consisting of irradiance caching followed by (in my case) path tracing. I did not implement the hierarchical evaluation method, which uses an octree and is described in a later paper of Jensen's. Instead, I used a simplified version that stores the cached irradiance points in a KD-tree and gathers the points within a given radius. To generate the irradiance points, I used the uniform sampling methods written for an earlier assignment and let the area of each cached point equal the total area of the mesh divided by the number of cached points on it. Each mesh got its own KD-tree. Below is the Cornell Box scene, with one diffuse sphere and one marble sphere:

Cornell Box

Ajax Scene
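As a rough sketch of the gather step described above (not the code in subsurface_scatter.cpp), the example below evaluates the dipole diffuse reflectance \(R_d(r)\) from Jensen et al. and sums it over the cached points within a radius. The names `DipoleParams`, `CachedSample`, `dipoleRd`, and `gatherRadiantExitance` are hypothetical, the coefficients are single-channel, and the linear scan is only a stand-in for the per-mesh KD-tree radius query.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical single-channel material description.
struct DipoleParams {
    double sigmaA;       // absorption coefficient
    double sigmaSPrime;  // reduced scattering coefficient
    double eta;          // relative index of refraction
};

// Dipole diffusion approximation R_d(r) (Jensen et al. 2001).
double dipoleRd(const DipoleParams &m, double r) {
    assert(m.sigmaA > 0.0 && m.sigmaSPrime > 0.0 && m.eta > 0.0 && r >= 0.0);
    const double kPi = 3.14159265358979323846;
    double sigmaTPrime = m.sigmaA + m.sigmaSPrime;              // reduced extinction
    double alphaPrime  = m.sigmaSPrime / sigmaTPrime;           // reduced albedo
    double sigmaTr     = std::sqrt(3.0 * m.sigmaA * sigmaTPrime);
    double fdr = -1.440 / (m.eta * m.eta) + 0.710 / m.eta + 0.668 + 0.0636 * m.eta;
    double a   = (1.0 + fdr) / (1.0 - fdr);                     // boundary term
    double zr  = 1.0 / sigmaTPrime;                             // real source depth
    double zv  = zr * (1.0 + 4.0 * a / 3.0);                    // virtual source height
    double dr  = std::sqrt(r * r + zr * zr);
    double dv  = std::sqrt(r * r + zv * zv);
    double real = zr * (sigmaTr * dr + 1.0) * std::exp(-sigmaTr * dr) / (dr * dr * dr);
    double virt = zv * (sigmaTr * dv + 1.0) * std::exp(-sigmaTr * dv) / (dv * dv * dv);
    return alphaPrime / (4.0 * kPi) * (real + virt);
}

// Hypothetical cached irradiance sample: position, irradiance, and the
// surface area it represents (total mesh area / number of samples).
struct CachedSample {
    double x, y, z;
    double irradiance;
    double area;
};

// Sum R_d * E * A over the samples within `radius` of the shading point.
// Samples farther away are ignored, which is the simplification in the text.
double gatherRadiantExitance(const DipoleParams &m,
                             const std::vector<CachedSample> &samples,
                             double px, double py, double pz, double radius) {
    double sum = 0.0;
    for (const CachedSample &s : samples) {
        double dx = s.x - px, dy = s.y - py, dz = s.z - pz;
        double dist = std::sqrt(dx * dx + dy * dy + dz * dz);
        if (dist <= radius)
            sum += dipoleRd(m, dist) * s.irradiance * s.area;
    }
    return sum;
}
```

Because \(R_d\) falls off quickly with distance, dropping far samples costs little on a small sphere, but the cutoff radius has to grow with the object for the sum to stay accurate.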

Validation Tests

Real Marble Balls vs My Marble Sphere

Relevant Pieces of Code

- subsurface_scatter.cpp

- translucent.cpp

- CachedPoint.cpp

- CachedPoint.h

Environment Mapping (unable to complete)

I was really excited about implementing an environment map, but ended up running into large issues with loading images into C++. The default library given to us, stb_image, was unable to properly load any of the JPEG or PNG images that I tried. I spent a couple of hours trying to incorporate other libraries into nori (libpng and CImg in particular) without success, so I was unable to complete this section. My code for the environment map and importance sampling is still in environment_light.cpp and compiles, although it is untested.
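For reference, the lookup at the core of an environment map can be sketched independently of image loading. The example below is not taken from my environment light code. It assumes a latitude-longitude (equirectangular) image with +y as the up axis, and the names `Vec3`, `UV`, `directionToLatLong`, and `latLongToDirection` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct UV   { double u, v; };   // both in [0, 1]

const double kPi = 3.14159265358979323846;

// Map a world-space direction to lat-long texture coordinates.
// v = 0 is straight up (+y), v = 1 is straight down; u wraps around the y axis.
UV directionToLatLong(const Vec3 &d) {
    double len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    assert(len > 0.0);
    double cosTheta = std::max(-1.0, std::min(1.0, d.y / len));
    double theta = std::acos(cosTheta);          // polar angle from +y, in [0, pi]
    double phi = std::atan2(d.z, d.x);           // azimuth in [-pi, pi]
    if (phi < 0.0) phi += 2.0 * kPi;             // shift to [0, 2*pi)
    return {phi / (2.0 * kPi), theta / kPi};
}

// Inverse mapping: texture coordinates back to a unit direction.
Vec3 latLongToDirection(const UV &uv) {
    double theta = uv.v * kPi;
    double phi = uv.u * 2.0 * kPi;
    return {std::sin(theta) * std::cos(phi),
            std::cos(theta),
            std::sin(theta) * std::sin(phi)};
}
```

When importance sampling such a map by pixel luminance, the pixel-space density also has to be divided by \(2\pi^2 \sin\theta\) to convert it to a solid-angle density, since rows near the poles cover less of the sphere.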

Relevant Pieces of Code

- enviornment_light.cpp

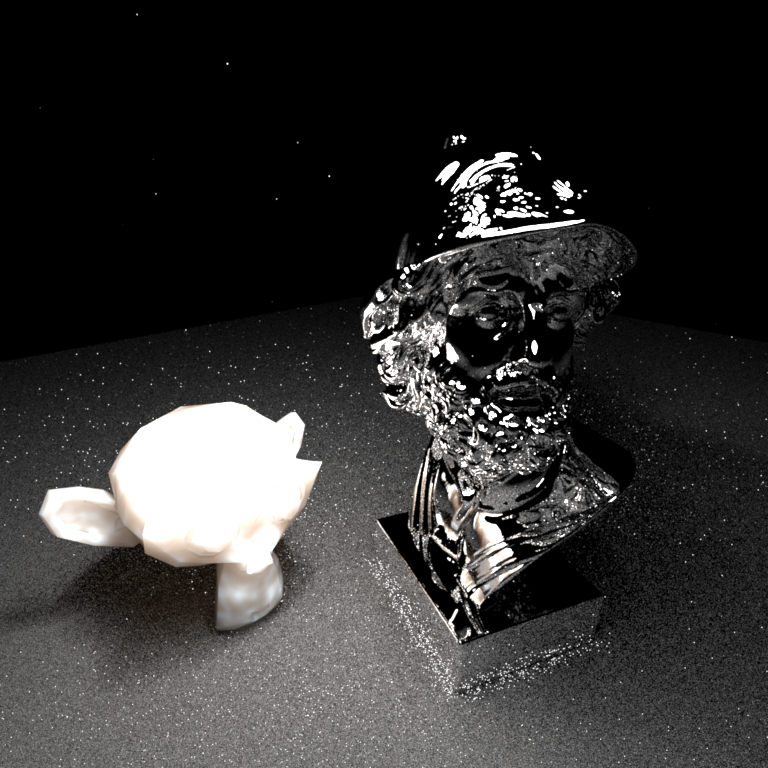

Final Image

For my final image I rendered a scene of a small marble monkey next to a mirrored bust. The differences in size, material, and form between the monkey and the more serious bust illustrate my theme of contrast. I included images both with and without the medium to show the rendered objects themselves.

Relevant Pieces of Code

- multi_integrator.cpp

Reflections

I originally decided to use path tracing for my homogeneous volume because I thought it would work better with the subsurface scattering integrator when I combined the two. In retrospect, path tracing was too inefficient and required large amounts of time to render volumes with low variance. For subsurface scattering, I thought that I would be able to use a KD-tree and just grab the cached points that were nearby, ignoring the ones that were farther away. While this worked well for the small objects I tested on originally (such as the sphere in the Cornell Box), it proved less effective for larger objects with more complex geometry. It also meant that I needed more cached points to render the images I did, which slowed down the entire path tracing process. The environment map was the last component I attempted, and the technical challenges with importing images, together with a lack of time, prevented me from finding a solution. Overall, I was most proud of my subsurface scattering method because I felt it produced a very realistic result in my final image and was the more involved of the two techniques I was able to implement.

Works Referenced

- Jensen, H. W., Marschner, S. R., Levoy, M., and Hanrahan, P. "A Practical Model for Subsurface Light Transport." Proc. SIGGRAPH, ACM Press, 2001.

- Pharr, M. and Humphreys, G. Physically Based Rendering: From Theory to Implementation, Second Edition. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 2010.