Lab Assignment 4 is due next Wednesday, May 21.

Sometimes instead of finding a single path or a single solution we want to find all solutions. A systematic way of doing this is called backtracking. It is a variant of depth-first search on an implicit graph. The typical approach uses recursion. At every choice point, loop through all the choices. For each choice, solve the problem recursively. Succeed if you find a solution; otherwise fail and backtrack to try an alternative.

Let's consider the N-queens problem. The goal is to place N queens on an N × N chessboard such that no two queens can capture one another. (Two queens can capture one another if they are in the same row, column, or diagonal.)

A truly brutish brute-force solution technique would be to consider all possible ways of placing N queens on the board and then check each configuration. This technique is truly awful, because for an N × N board, there are $N^2$ squares and hence $\binom{N^2}{N}$ possible configurations. That's the number of ways to choose N items (squares) out of $N^2$, and it's equal to $\displaystyle \frac{(N^2)!}{N!\,(N^2-N)!}$. For an 8 × 8 board, where N = 8, that comes to 4,426,165,368 configurations.

Another, less brutish, brute-force solution takes advantage of the property that we must have exactly one queen per column. So we can just try all combinations of queens, as long as there's one per column. There are N ways to put a queen in column 1, N ways to put a queen in column 2, and so on, for a total of $N^N$ configurations to check. When N = 8, we have reduced the number of configurations to 16,777,216.

We can make our brute-force solution a little better yet. Since no two queens can be in the same row, we can just try all permutations of 1 through N, saying that the first number is the row number for column 1, the second number is the row number for column 2, and so on. Now there are "only" N! configurations, which is 40,320 for N = 8. Of course, for a larger board, N! can be mighty large. In fact, once we get to N = 13, we get that N! equals 6,227,020,800, and so things are worse than the most brutish brute-force solution for N = 8.
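The three counts above are easy to check by computer. The following is a small sketch (the class and method names are our own, not from any course file) that computes them exactly with java.math.BigInteger, since values such as 13! already overflow an int.

```java
import java.math.BigInteger;

// Search-space sizes for the three brute-force strategies for N-queens.
class QueenCounts {
    // n! computed exactly.
    static BigInteger factorial(int n) {
        BigInteger result = BigInteger.ONE;
        for (int i = 2; i <= n; i++) {
            result = result.multiply(BigInteger.valueOf(i));
        }
        return result;
    }

    // Binomial coefficient C(n, k) = n! / (k! (n - k)!).
    static BigInteger choose(int n, int k) {
        return factorial(n).divide(factorial(k).multiply(factorial(n - k)));
    }

    // Any N of the N^2 squares.
    static BigInteger anySquares(int n) {
        return choose(n * n, n);
    }

    // Exactly one queen per column: N choices in each of N columns.
    static BigInteger onePerColumn(int n) {
        return BigInteger.valueOf(n).pow(n);
    }

    // One queen per column and per row: a permutation of the N rows.
    static BigInteger permutations(int n) {
        return factorial(n);
    }
}
```

With N = 8, anySquares, onePerColumn, and permutations give 4,426,165,368, 16,777,216, and 40,320, and permutations(13) gives 6,227,020,800, matching the figures in the text.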

So let's be a little smarter. Rather than just blasting out configurations, let's pay attention to what we've done. Start by placing a queen in column 1, row 1. Now we know that we cannot put a queen in column 2, row 1, because the two queens would be in the same row. We also cannot put a queen in column 2, row 2, because the queens would be on the same diagonal. So we place a queen in column 2, row 3. Now we move on to column 3. We cannot put the queen in any of rows 1–4 (think about why not), and so we put the queen in row 5. And so on.

This approach is called pruning. At the kth step, we try to extend the partial solution with k − 1 queens by adding a queen in the kth column in all possible positions. But "possible" now means positions that don't conflict with earlier queen placements. It may be that no choices are possible. In that case, the partial solution cannot be extended. In other words, it's infeasible. And so we backtrack, undoing some choices and trying others.

Why do we call this approach pruning? Because you can view the search as a tree in which the path from the root to any node in the tree represents a partial solution, making a particular choice at each step. When we reject an invalid partial solution, we are lopping off its node and the entire subtree that would go below it, so that we have "pruned" the search tree. Early pruning lops off large subtrees. Effort spent pruning usually (but not always) pays off in the end, because of the exponential growth in the number of solutions considered each time a choice is made.

NQueens.java solves the N-queens problem using pruning. The solve method is where pruning takes place. If the noConflict method returns false, then the partial solution has a conflict, and the entire search tree rooted at that partial solution is pruned. (The clone method makes a copy of the object it's invoked on. Try seeing what happens if we just call solList.add(partialSol).) The noConflict method is pretty slick in how quickly it determines whether there are conflicts in rows or diagonals.
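A minimal backtracking sketch of the same idea follows. It is not the course's NQueens.java: the names QueensSketch, safe, place, and solveAll are hypothetical, rows and columns are numbered from 0, and rowOf[c] holds the row of the queen in column c. The safe check is where pruning happens, since a conflicting placement is never extended and its whole subtree is skipped. The clone() when recording a solution matters for the same reason the text gives: rowOf is reused and overwritten as the search backtracks.

```java
import java.util.ArrayList;
import java.util.List;

class QueensSketch {
    // True if a queen at (col, row) conflicts with none of the queens in columns 0..col-1.
    static boolean safe(int[] rowOf, int col, int row) {
        for (int c = 0; c < col; c++) {
            int r = rowOf[c];
            // Same row, or same diagonal (row distance equals column distance).
            if (r == row || Math.abs(r - row) == col - c) {
                return false;
            }
        }
        return true;
    }

    // Tries every safe row for column col, then recurses on the next column.
    static void place(int n, int col, int[] rowOf, List<int[]> solutions) {
        if (col == n) {
            solutions.add(rowOf.clone()); // copy, because rowOf keeps changing
            return;
        }
        for (int row = 0; row < n; row++) {
            if (safe(rowOf, col, row)) { // prune: never extend a conflicting placement
                rowOf[col] = row;        // the next iteration overwrites this, undoing it
                place(n, col + 1, rowOf, solutions);
            }
        }
    }

    static List<int[]> solveAll(int n) {
        List<int[]> solutions = new ArrayList<>();
        place(n, 0, new int[n], solutions);
        return solutions;
    }
}
```

For N = 8, solveAll finds 92 solutions. The first one found is [0, 4, 7, 5, 2, 6, 1, 3], that is, rows 1, 5, 8, 6, 3, 7, 2, 4 for columns 1 through 8.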

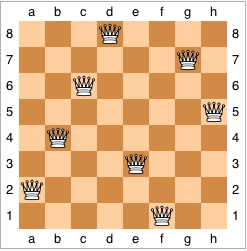

Here is one solution for N = 8:

There are three basic approaches for programming strategy games such as Nim, Chips, chess, and checkers. These games are called "games of perfect information" because both players know everything about the state of the game (unlike poker, bridge, and other card games, where you know your cards but not your opponent's). They also have no random or chance elements like backgammon or Monopoly, where rolls of the dice play a part in determining outcomes.

The first approach is to come up with a strategy: a set of rules that tells you how to decide on your next move. For some games, there is a simple strategy that will allow you to win if a win is possible.

One such game is called Nim, where you start out with n sticks in a pile and two players. In each turn, a player is allowed to take between 1 and k sticks. The player who takes the last stick wins. This game has a simple strategy based on the quantity k + 1. If n is a multiple of k + 1, player 2 can always win. Think of pairs of turns, with player 1 and then player 2 taking sticks in each pair of turns. In each pair of turns, if player 1 takes m sticks, then player 2 takes k + 1 − m sticks to get to the next smaller multiple of k + 1. Thus, when we get down to k + 1 sticks, after player 1 takes m sticks, player 2 takes k + 1 − m sticks, emptying the pile, and winning.

What if n is not a multiple of k + 1? Then the shoe goes on the other foot. Player 1 can take enough sticks to leave a multiple of k + 1 sticks in the pile. Player 1 now follows the player 2 strategy described above. In this case, player 1 can always win.
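The whole strategy fits in one expression: take n mod (k + 1) sticks. Below is a small sketch (the class and method names are hypothetical) that pairs this rule with an exhaustive search, which can be used to confirm the multiple-of-(k + 1) argument for small piles.

```java
// Nim as described above: take 1..k sticks per turn; whoever takes the last stick wins.
class NimStrategy {
    // Number of sticks to take so the opponent faces a multiple of k + 1.
    // Returns 0 when n is already a multiple of k + 1 (no winning move exists).
    static int winningTake(int n, int k) {
        return n % (k + 1);
    }

    // Exhaustive check: can the player to move force a win from n sticks?
    static boolean canWin(int n, int k) {
        for (int take = 1; take <= Math.min(k, n); take++) {
            // Taking the last stick wins outright; otherwise we win if the opponent cannot.
            if (take == n || !canWin(n - take, k)) {
                return true;
            }
        }
        return false;
    }
}
```

For example, with 10 sticks and k = 3, winningTake(10, 3) is 2, leaving 8 sticks, a multiple of 4. The brute-force canWin agrees with the rule that a pile is a loss for the player to move exactly when it is a multiple of k + 1.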

Games that have a simple strategy are not very interesting to play. Fortunately there are many interesting games, such as chess, where no such simple strategy is known.

The second approach is game tree search, which is the classic approach used for playing checkers, chess, Kalah, Connect 4, and similar board games. It tries all moves for player 1, all responses for player 2, all third moves for player 1, and so on, building up a game tree.

Unfortunately, the game tree search approach is hopeless for Go and other games with huge branching factors. (Go has 361 possible first moves, 360 replies to each of those, etc.) It also does not deal well with games that incorporate randomness, such as bridge or backgammon.

The third approach is Monte Carlo simulation. To decide what move to make, simulate thousands of random games that start from the current position. For each possible move, compute the percentage of the games that started with that move and ended in a win, and choose the move with the best winning percentage. This seems like a stupid approach, but if enough games are played, it works reasonably well.
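Here is a bare-bones sketch of plain Monte Carlo move selection applied to the Nim game described earlier, where both players move uniformly at random in every simulated game. The class name, the use of java.util.Random, and the number of simulated games are our own choices for illustration.

```java
import java.util.Random;

// Plain Monte Carlo move choice for Nim (take 1..k sticks, last stick wins).
class MonteCarloNim {
    // Plays uniformly random moves until the pile is empty.
    // Returns 0 if the player to move at the start takes the last stick, 1 otherwise.
    static int randomPlayout(int sticks, int k, Random rng) {
        int turn = 0;
        while (true) {
            sticks -= 1 + rng.nextInt(Math.min(k, sticks));
            if (sticks == 0) {
                return turn;
            }
            turn = 1 - turn;
        }
    }

    // Simulates the given number of random games from the current position (sticks >= 1)
    // and returns the first move with the best winning percentage (ties go to the smaller move).
    static int chooseMove(int sticks, int k, int games, Random rng) {
        int maxTake = Math.min(k, sticks);
        int[] plays = new int[maxTake + 1];
        int[] wins = new int[maxTake + 1];
        for (int g = 0; g < games; g++) {
            int first = 1 + rng.nextInt(maxTake); // random first move for this game
            int left = sticks - first;
            // We win if we took the last stick, or if the opponent (who moves first
            // in the playout) does not take the last stick.
            boolean won = left == 0 || randomPlayout(left, k, rng) == 1;
            plays[first]++;
            if (won) {
                wins[first]++;
            }
        }
        int bestMove = 1;
        double bestRate = -1.0;
        for (int move = 1; move <= maxTake; move++) {
            if (plays[move] == 0) {
                continue; // never sampled
            }
            double rate = (double) wins[move] / plays[move];
            if (rate > bestRate) {
                bestRate = rate;
                bestMove = move;
            }
        }
        return bestMove;
    }
}
```

For example, with 3 sticks and k = 3, taking all 3 wins every simulated game while the other moves do not, so chooseMove(3, 3, 3000, new Random(42)) should pick 3.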

There is a more sophisticated method, called Monte-Carlo tree search, that combines the two approaches. The strongest Go and bridge playing programs use this approach. This paper gives a two-page overview of the method, if you'd like to know more. (But please don't ask me about it. I'm not an AI guy.)

This approach can easily deal with random events such as die rolls or with imperfect information such as hidden cards. For random events, it simply simulates them as they arise in the game. For hidden information, it generates a random layout at the start (e.g., a random deal for bridge) and then simulates the game on that layout.

In our old introductory course, CS 5, we asked students to write a referee program for a variant of Nim called Chips. The game starts with a pile of n poker chips and two players. There are several versions. In one, player 1 takes between 1 and n − 1 chips. The two players take turns, and for each subsequent turn, the player can take between 1 and twice the number of chips that the player's opponent took on the previous turn. The winner is the player who takes the last chip, just as in Nim. If there is an easy strategy for playing this game, we don't know it. This makes it a more interesting game, and a candidate for game tree search.

The first step conceptually is to create the "game tree" of all possible games from the given position. The diagram below shows the game tree for Chips starting with 4 chips in the pile. On the first move it is possible to take 3, 2, or 1 chips. Each of these leads to a different game state. If you take 3 chips, then there is only 1 chip left, and so your opponent must take it. If you take 2 chips, then there are 2 chips left, and your opponent can choose to take 1 or 2 chips. If you take 1 chip, then there are 3 chips left, and by the rules of Chips your opponent can take 1 or 2 of them (at most double what you took last move). It is tedious, but in principle you could draw the entire game tree for any game of perfect information.

Here, the number in each node represents the number of chips untaken, and the number on each edge represents the number of chips taken in a move. The root is the initial game state. Nodes at odd depths represent outcomes of moves by player 1, and nodes at even depths represent outcomes of moves by player 2.
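The tree can also be generated mechanically. This sketch (names hypothetical) prints each node as the number of chips left, indented by depth and preceded by the edge label (the number of chips taken). It uses the Chips rules described above: the first move takes 1 to n − 1 chips, and each later move takes 1 to twice the previous move.

```java
// Prints the Chips game tree and returns the number of complete games.
class ChipsTree {
    // chips: chips left; maxTake: most the player to move may take;
    // took: chips taken on the edge into this node (ignored at the root).
    static int walk(int chips, int maxTake, int took, int depth, StringBuilder out) {
        out.append("  ".repeat(depth));
        if (depth > 0) {
            out.append("take ").append(took).append(" -> ");
        }
        out.append(chips).append(" left\n");
        if (chips == 0) {
            return 1; // terminal node: one complete game
        }
        int games = 0;
        for (int take = 1; take <= Math.min(maxTake, chips); take++) {
            // The opponent may then take up to twice what was just taken.
            games += walk(chips - take, 2 * take, take, depth + 1, out);
        }
        return games;
    }
}
```

Calling walk(4, 3, 0, 0, out) on an empty StringBuilder writes the 14 nodes of the 4-chip tree and returns 6, the number of distinct complete games.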

Once the game tree is drawn, it is necessary to evaluate it. At terminal nodes, the game is over. In Chips, terminal nodes have no chips left in the pile. In that case, you know the winner. It is traditional to label the game tree from the point of view of the player making the first move. Therefore, a node where player 1 wins is labeled W, and a node where player 1 loses is labeled L. A terminal node at an odd depth is labeled W, since it means that player 1 took the last chip, and a terminal node at an even depth is labeled L, since it means that player 2 took the last chip.

Once the terminal nodes are labeled, we can evaluate the internal nodes, starting from the bottom of the tree and working up to the root (the top node). A node at an even depth (with the root having depth 0) is a position in which player 1 is to move. Therefore, if any of its children is labeled W, we label that node W, since player 1 has a winning strategy. Otherwise, if all children of a node at even depth are labeled L, we label the node L, because player 1 has no winning strategy. We reverse the situation for nodes at odd depth, which are positions in which player 2 is to move. Here, if any child is labeled L, we label the odd-depth node L, since player 2 has a winning strategy. Otherwise, we label the odd-depth node W, since player 2 has no winning strategy.

In this manner, we can label all nodes in the game tree with a W or an L. If the root node is labeled W, then player 1 can win and should choose any move that leads to a node labeled W. If the root is labeled L, then player 1 cannot win against the best play by player 2. Assuming that player 2 plays optimally, all player 1 can do is choose a move at random or a move that will prolong the game as much as possible.
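The labeling procedure translates directly into a recursive function. In this sketch (names hypothetical, last-chip-wins Chips rules as above), depth parity decides whose turn it is, terminal nodes are labeled by who took the last chip, and the search over children stops as soon as the player to move finds a good child, which gives the same label as examining all of them.

```java
// W/L labeling of the Chips game tree from player 1's point of view.
class ChipsLabel {
    // chips: chips left; maxTake: most the player to move may take;
    // depth: distance from the root (player 1 moves at even depths).
    static char label(int chips, int maxTake, int depth) {
        if (chips == 0) {
            // Odd depth: player 1 took the last chip. Even depth: player 2 did.
            return depth % 2 == 1 ? 'W' : 'L';
        }
        boolean player1ToMove = depth % 2 == 0;
        for (int take = 1; take <= Math.min(maxTake, chips); take++) {
            char child = label(chips - take, 2 * take, depth + 1);
            if (player1ToMove && child == 'W') {
                return 'W'; // player 1 has a winning move
            }
            if (!player1ToMove && child == 'L') {
                return 'L'; // player 2 has a winning move
            }
        }
        return player1ToMove ? 'L' : 'W';
    }

    // A first move from n >= 2 chips that leads to a W node, or 0 if the root is L.
    static int winningFirstMove(int n) {
        for (int take = 1; take <= n - 1; take++) {
            if (label(n - take, 2 * take, 1) == 'W') {
                return take;
            }
        }
        return 0;
    }
}
```

For the 4-chip tree, label(4, 3, 0) is W and winningFirstMove(4) is 1: taking one chip leaves 3, and whether the opponent takes 1 or 2, player 1 can take the rest. With 5 chips the root is labeled L.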

This approach is implemented in the files Chips.java, Game.java, Player.java, HumanPlayer.java, and ComputerPlayer.java.

The Game class records the state of the game. It keeps track of the size of the pile, the maximum move that can be made by the next player, and two Player objects. It also keeps track of which player should move next. It provides methods to make a move, test if the game is over, determine the winner, copy itself, and return various pieces of information about the state of the game.

Note that Player is an abstract class, and it keeps track of the player name and the number of chips that a player has taken. It provides methods to get and update this information. It also requires non-abstract classes that extend it to implement methods to make a copy of this player object and to get the move to make.

We choose to make each player either a HumanPlayer (which asks the user for a move) or a ComputerPlayer (which picks moves using game tree search). This is why I put the getMove method in the Player class rather than elsewhere: each kind of player can use a different method of picking a move.

The Chips class supplies the high-level control of the game. It creates the players and creates the initial Game object. It then enters a loop that continues until the game is over. In this loop, it describes the state of the game, gets a move from the player whose turn it is to move, and reports the move. When the game is over, it prints the final state and the winner.

The rules for this game are a bit different from the ones described above. The initial number of chips must be an odd number, at least 3. Player 1 can take between 1 and n/2 of the chips, and the winner is the player who has taken an even number of chips. However, this program can be changed to the last-chip-wins rule by changing a few things in the Game class. Specifically:

- Change maxMove from chips/2 to chips-1 in the constructor.
- Change getWinner to use the new rule for deciding the winner.
- Change the inputInitChips method to not require the number of chips to be odd.

Everything else is unchanged.

The game tree search is done in the recursive function pickBestMove. This deceptively simple function is as follows:

```java
private static int pickBestMove(Game state) {
    // Try moves from maximum first, looking for a winning move
    for (int move = state.getMaxMove(); move > 0; move--) {
        // Don't want to change current state, so make a copy
        Game newState = state.makeCopy();
        newState.makeMove(move);  // Make the move on the copy

        if (newState.isOver()) {
            if (newState.getWinner() == newState.getOtherPlayer())
                return move;  // Winner was the player who just made the move
        }
        else if (pickBestMove(newState) == 0)  // No winning move for the other player?
            return move;  // If the other player can't win, then I can
    }
    return 0;  // No winning move found, so the current player loses
}
```

This function tries all legal moves, from maxMove down to 1. For each move, the function first copies the state of the game into newState by calling the makeCopy() method. It then calls newState.makeMove(move), which makes the move being considered.

After making the move, there are two possibilities. If the game is over, then we figure out who wins and return this move if it is a winner for the player currently moving. (Note that once the current player finds a winning move, there is no reason to search further, so we can just return this winning move.)

If the game is not over, then we call pickBestMove(newState). This asks for the best move for the opponent, because newState holds the state of the game after the move was made. If the opponent has no winning move, then this is a winning move for us, and we return it.

If we exhaust all the moves without finding a winning move, then this is a losing position for us and we return 0 to indicate that.
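To run pickBestMove outside the course files, you need something that behaves like Game. The stand-in below is hypothetical and simplified: players are the ints 1 and 2 rather than Player objects, and it uses the last-chip-wins rules (first move up to n − 1, later moves up to twice the previous move) rather than the program's even-chips rule. The search method is the function above with only the parameter type changed.

```java
// Hypothetical, simplified stand-in for Game: players are 1 and 2,
// and the player who takes the last chip wins.
class MiniGame {
    private int chips;       // chips left in the pile
    private int maxMove;     // most the next player may take (before capping by chips)
    private int current = 1; // player to move next
    private int lastMover;   // player who made the most recent move

    MiniGame(int chips) {
        this.chips = chips;
        this.maxMove = chips - 1; // first move: 1 to n - 1 chips
    }

    int getMaxMove() { return Math.min(maxMove, chips); }

    MiniGame makeCopy() {
        MiniGame copy = new MiniGame(chips);
        copy.maxMove = maxMove;
        copy.current = current;
        copy.lastMover = lastMover;
        return copy;
    }

    void makeMove(int move) {
        chips -= move;
        maxMove = 2 * move;    // next player may take up to twice this move
        lastMover = current;
        current = 3 - current; // switch players
    }

    boolean isOver() { return chips == 0; }
    int getWinner() { return lastMover; }        // last chip wins
    int getOtherPlayer() { return 3 - current; } // the player not moving next
}

class ChipsSearch {
    static int pickBestMove(MiniGame state) {
        for (int move = state.getMaxMove(); move > 0; move--) {
            MiniGame newState = state.makeCopy();
            newState.makeMove(move);
            if (newState.isOver()) {
                if (newState.getWinner() == newState.getOtherPlayer())
                    return move; // the mover took the last chip
            } else if (pickBestMove(newState) == 0) {
                return move;     // opponent has no winning reply
            }
        }
        return 0;                // every move loses against best play
    }
}
```

With this stand-in, pickBestMove(new MiniGame(4)) returns 1 (take one chip, leaving 3), while starting piles of 2, 3, and 5 chips return 0.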

This approach works fine for Chips with about 30 chips, but if you try it for 100 chips, you will wait a long time for the computer to decide its move. The reason is that the game tree grows exponentially with the number of moves. For a game like chess, we could easily write a program to search the entire game tree, but it would not be practical.

John McCarthy at Stanford, a pioneer in AI (and once a Dartmouth math professor), defined "computable in theory, but not in practice" as follows. If

then the computation is not practical. McCarthy computed that doing a game tree search for chess is not practical in this sense. Even if an entire game of chess could be evaluated in the time it takes light to cross a hydrogen atom, there are so many possible games that the computation would not complete in the projected life of the universe.

So what can we do instead? We look ahead a certain number of moves, and then estimate who is winning. This estimate is called static evaluation. Here "static" means without looking ahead. For chess, it would depend on who had more pieces (and more valuable pieces), how mobile the pieces were, how well protected the king was, the pawn structures, and other similar considerations.

There is a tradeoff between doing an accurate static evaluation and depth of lookahead. An accurate but slow static evaluation can be worse than a fast but less accurate evaluation. In the 1970s there was a chess program called Chess 4.2. It incorporated a lot of chess knowledge, but was mediocre in tournaments. Its authors ripped out most of the chess knowledge and concentrated on making it fast, and it became national champion, because looking further ahead was more useful than having an accurate evaluation of a static position.

The static evaluation of the game is then used in game tree search evaluation. Instead of just W or L, each node is assigned a number corresponding to the score. In games such as Go, where you not only win or lose but there is a measure of by how much you win or lose, you would use scores in this way even if you evaluated the entire game tree, so that you could find the biggest win. Scores are assigned in terms of player 1. Scores for player 2 would be the negative of scores for player 1.

Each terminal node (or node where the look-ahead depth is exhausted) is given a score. On moves where it is player 1's move, that player will choose the move to maximize the score. On moves where it is player 2's move, that player will choose the move to minimize the score. (Alternatively, player 2 can maximize the negatives of player 1's scores.) The following picture shows a game tree for this sort of game, with integer values instead of W and L for values:
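The max/min rule can be written as a short recursive function. In this sketch (names and example tree hypothetical), each node carries a score for player 1: at a leaf it is the final score, and at an interior node it stands in for the static evaluation used when the lookahead depth runs out. A negamax version, in which each player maximizes the negation of the opponent's value, is included to show that the two formulations agree.

```java
import java.util.Arrays;
import java.util.List;

class Minimax {
    // A game-tree node: a score for player 1 and the positions reachable in one move.
    static final class Node {
        final int estimate;
        final List<Node> children;

        Node(int estimate, Node... children) {
            this.estimate = estimate;
            this.children = Arrays.asList(children);
        }
    }

    // Player 1 maximizes, player 2 minimizes; stop at leaves or when depth runs out.
    static int minimax(Node node, int depth, boolean player1ToMove) {
        if (node.children.isEmpty() || depth == 0) {
            return node.estimate; // final score or static evaluation
        }
        int best = player1ToMove ? Integer.MIN_VALUE : Integer.MAX_VALUE;
        for (Node child : node.children) {
            int value = minimax(child, depth - 1, !player1ToMove);
            best = player1ToMove ? Math.max(best, value) : Math.min(best, value);
        }
        return best;
    }

    // Negamax: color is +1 when player 1 is to move and -1 when player 2 is.
    // The result is the value from the point of view of the player to move.
    static int negamax(Node node, int depth, int color) {
        if (node.children.isEmpty() || depth == 0) {
            return color * node.estimate;
        }
        int best = Integer.MIN_VALUE;
        for (Node child : node.children) {
            best = Math.max(best, -negamax(child, depth - 1, -color));
        }
        return best;
    }
}
```

For a root whose moves lead to A (estimate 1; leaves 3 and 5) and B (estimate 4; leaves 2 and 9), searching to depth 2 gives max(min(3, 5), min(2, 9)) = 3. Searching only to depth 1 trusts the static estimates instead and gives 4, preferring B.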

This approach of looking ahead a fixed number of moves is not a perfect solution. It works well when the static evaluation function is fairly accurate, but in dynamic situations it often is not that accurate. An example arises in chess. If you capture your opponent's queen, that is good, because the queen is the strongest piece. If, however, on the next move your opponent captures your queen, then you have exchanged queens, which leaves the relative strengths of the two sides approximately where they were before the exchange took place.

However, consider what happens if you are looking ahead 5 moves, and on the last of those 5 moves you capture your opponent's queen. Your opponent will capture your queen on the sixth move, but that is beyond what your lookahead can see. Therefore the situation looks very good, and you might choose a move that leads to this situation rather than one that is in practice a much better move because it leads to a real rather than an illusory gain. This problem is called the horizon effect. The bad event of your queen getting captured lies beyond the lookahead horizon, so it is invisible and, as far as your program is concerned, does not exist.

It is even worse than this. It may be that it is inevitable that your queen will be captured (perhaps it is pinned or trapped). However, if giving away a pawn (or a bishop or knight or rook) pushes the capture over the horizon, your program will begin to behave very irrationally. It will compare the result of losing the queen if it plays normally vs. losing a rook and will decide to sacrifice the rook. But this does not prevent the capture of the queen, and so the program will lose the rook and then still have to lose the queen.

To avoid this problem, chess-playing programs try to extend their lookahead depth when the situation is dynamic (pieces in threat of being captured, etc.). The problem with this idea is that they then can go on looking ahead many moves and run out of time without ever deciding on a move.
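A minimal sketch of that idea follows, on an explicit tree whose nodes are marked quiet or not (a hypothetical stand-in for a real test such as "a piece can be captured"). When the depth runs out at a position that is not quiet, the search keeps going, but only for a bounded number of extra plies. That cap is one simple way to keep the extension from using up all the available time. The names and the cap are illustrative choices, not taken from any particular chess program.

```java
import java.util.Arrays;
import java.util.List;

class ExtendedSearch {
    static final class Node {
        final int estimate;      // static evaluation for player 1
        final boolean quiet;     // false if the position is still "dynamic"
        final List<Node> children;

        Node(int estimate, boolean quiet, Node... children) {
            this.estimate = estimate;
            this.quiet = quiet;
            this.children = Arrays.asList(children);
        }
    }

    // Depth-limited minimax that keeps searching past depth 0 while the position
    // is not quiet, but for at most `extensions` extra plies along any path.
    static int search(Node node, int depth, int extensions, boolean player1ToMove) {
        if (node.children.isEmpty()) {
            return node.estimate; // game over: nothing further to look at
        }
        if (depth <= 0) {
            if (node.quiet || extensions == 0) {
                return node.estimate; // trust the static evaluation here
            }
            extensions--; // spend one extra ply on a dynamic position
        }
        int best = player1ToMove ? Integer.MIN_VALUE : Integer.MAX_VALUE;
        for (Node child : node.children) {
            int value = search(child, depth - 1, extensions, !player1ToMove);
            best = player1ToMove ? Math.max(best, value) : Math.min(best, value);
        }
        return best;
    }
}
```

Suppose one move leads to X (estimate +9 just after winning the queen, not quiet, with a single reply Y of estimate 0 that recaptures) and another to Z (estimate +1, quiet). A one-ply search with no extensions scores the root 9 and prefers X, which is the horizon effect; allowing two extension plies scores X as 0 and the root as 1, preferring Z.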